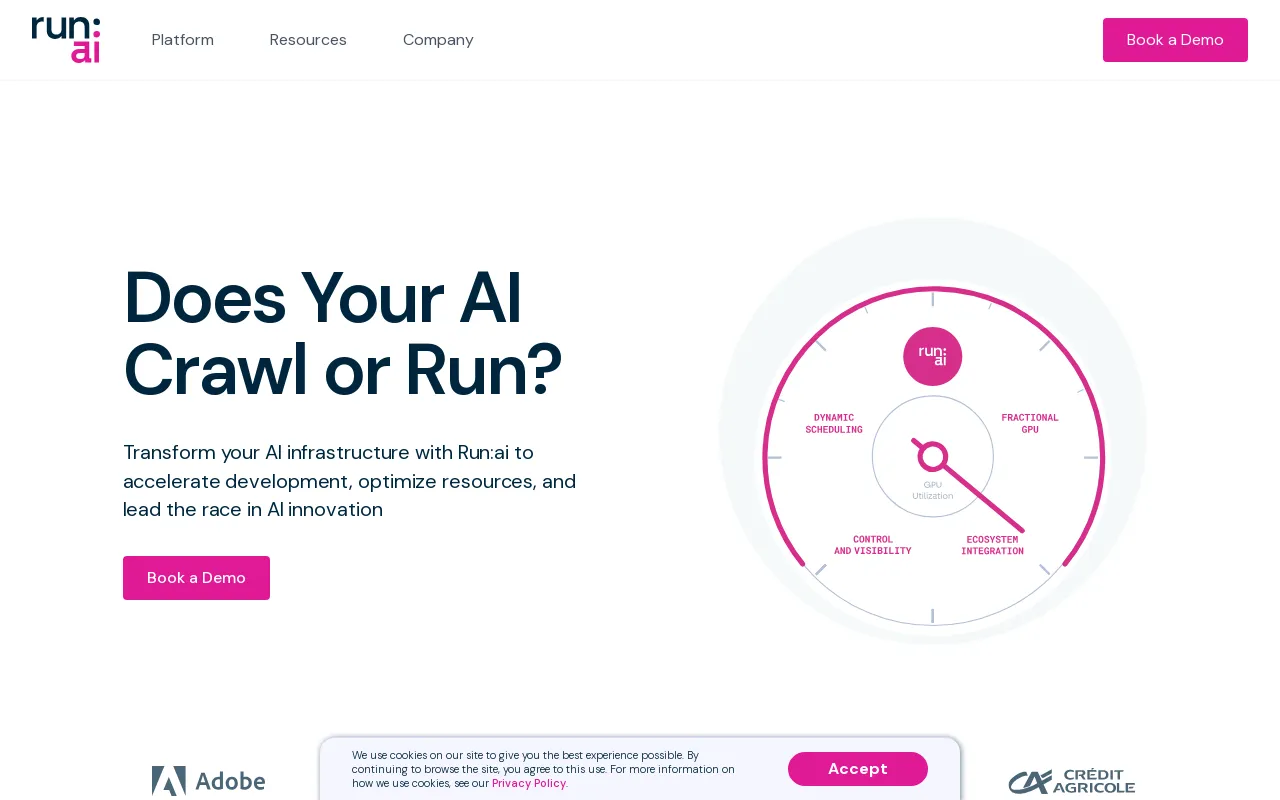

Run:ai Details

Product Information

Website

https://www.run.ai

Category

Conversational Chatbot

Location

New York, NY, USA; Tel Aviv, Israel

Documentation

https://docs.run.ai/latest/

Product Description

Run:ai Dev

Accelerate AI development and time-to-market

- Launch customized workspaces with your favorite tools and frameworks.

- Queue batch jobs and run distributed training with a single command line.

- Deploy and manage your inference models from one place.

Ecosystem

Boost GPU availability and multiply the return on your AI investment

- Workloads

- Assets

- Metrics

- Admin

- Authentication and Authorization

Run:ai API

- Workloads

- Assets

- Metrics

- Admin

- Authentication and Authorization

Run:ai Control Plane

Deploy on Your Own Infrastructure; Cloud. On-Prem. Air-Gapped.

- Multi-Cluster Management

- Dashboards & Reporting

- Workload Management

- Resource Access Policy

- Workload Policy

- Authorization & Access Control

Run:ai Cluster Engine

Meet Your New AI Cluster; Utilized. Scalable. Under Control.

- AI Workload Scheduler

- Node Pooling

- Container Orchestration

- GPU Fractioning

- GPU Nodes

- CPU Nodes

- Storage

- Network

CLI & GUI

Run:ai provides a user-friendly command-line interface (CLI) and a comprehensive graphical user interface (GUI) for managing your AI workloads and infrastructure. The CLI offers advanced control and scripting capabilities, while the GUI provides an intuitive visual experience for monitoring, configuring, and interacting with the platform.

Workspaces

Workspaces are isolated environments where AI practitioners can work on their projects. These workspaces are preconfigured with the necessary tools, libraries, and dependencies, simplifying the setup process and ensuring consistency across teams. Workspaces can be customized to meet specific project requirements and can be easily cloned or shared with collaborators.

Tools

Run:ai provides a suite of tools designed to enhance the efficiency and productivity of AI development. The tools offer features such as Jupyter Notebook integration, TensorBoard visualization, and model tracking. These tools streamline workflows, simplify data analysis, and improve collaboration among team members.

Open Source Frameworks

Run:ai supports a wide range of popular open-source AI frameworks, including TensorFlow, PyTorch, JAX, and Keras. This allows developers to leverage their preferred tools and libraries without needing to adapt their existing codebase to a specialized platform.

LLM Catalog

Run:ai offers an LLM Catalog, a curated collection of popular Large Language Models (LLMs) and their corresponding configurations. This catalog makes it easier to deploy and experiment with state-of-the-art LLMs, accelerating the development of AI applications that leverage advanced language processing capabilities.

Workloads

The Run:ai platform allows for effective management of diverse AI workloads, including:

* **Training:** Run:ai optimizes distributed training jobs, allowing you to effectively train models on large datasets across multiple GPUs.

* **Inference:** Run:ai streamlines model deployment and inference, enabling you to deploy models for real-time predictions or batch processing.

* **Notebook Farms:** Run:ai supports the creation and management of scalable notebook farms, providing a collaborative environment for data exploration and model prototyping.

* **Research Projects:** Run:ai is designed to facilitate research activities, offering a platform for experimentation with new models, algorithms, and techniques.

Assets

Run:ai allows users to manage and share AI assets, including:

* **Models:** Store and version trained models for easy access and deployment.

* **Datasets:** Store and manage large datasets for efficient use in training jobs.

* **Code:** Share and collaborate on code related to AI projects.

* **Experiments:** Track and compare the results of different AI experiments.

Metrics

Run:ai provides comprehensive monitoring and reporting capabilities, allowing users to track key metrics related to their AI workloads and infrastructure. This includes:

* **GPU utilization:** Monitor the utilization of GPUs across the cluster, ensuring efficient resource allocation.

* **Workload performance:** Track the performance of training and inference jobs, identifying bottlenecks and opportunities for optimization.

* **Resource consumption:** Monitor CPU, memory, and network usage, providing insights into resource utilization and potential optimization strategies.

Admin

Run:ai offers administrative tools for managing the platform and its users, including:

* **User management:** Control access rights and permissions for different users or groups.

* **Cluster configuration:** Configure the hardware and software resources within the AI cluster.

* **Policy enforcement:** Define and enforce resource allocation policies to ensure fairness and efficiency.

Authentication and Authorization

Run:ai provides secure authentication and authorization mechanisms to control access to resources and sensitive data. This includes:

* **Single sign-on (SSO):** Integrate with existing identity providers for seamless user authentication.

* **Role-based access control (RBAC):** Define roles with specific permissions, ensuring granular control over access to resources.

* **Multi-factor authentication (MFA):** Enhance security by requiring multiple factors for user login.
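The role-based model described above can be illustrated with a minimal sketch. The role names, permission strings, and the `is_allowed` helper are hypothetical and chosen only for illustration; they are not Run:ai's actual roles or API.

```python
# Hypothetical role-to-permission mapping (not Run:ai's real role set).
ROLE_PERMISSIONS = {
    "viewer": {"workload:view"},
    "researcher": {"workload:view", "workload:create"},
    "admin": {"workload:view", "workload:create", "workload:delete", "policy:edit"},
}


def is_allowed(user_roles: list[str], permission: str) -> bool:
    """Return True if any of the user's roles grants the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)


# A researcher may submit workloads but may not edit policies.
can_create = is_allowed(["researcher"], "workload:create")
can_edit_policy = is_allowed(["researcher"], "policy:edit")
```

Unknown roles grant nothing in this sketch, so access is denied by default.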

Multi-Cluster Management

Run:ai enables the management of multiple AI clusters from a central control plane. This allows organizations to:

* **Consolidate resources:** Aggregate resources across different clusters, providing a unified view of available capacity.

* **Standardize workflows:** Apply consistent policies and configurations across multiple clusters.

* **Optimize utilization:** Balance workload distribution across clusters for optimal resource allocation.

Dashboards & Reporting

Run:ai provides powerful dashboards and reporting tools to visualize key metrics, track workload performance, and gain insights into resource utilization. These capabilities include:

* **Real-time monitoring:** Track GPU utilization, workload progress, and resource usage in real time.

* **Historical analytics:** Analyze historical data to identify trends, optimize resource allocation, and improve workload performance.

* **Customizable dashboards:** Create custom dashboards tailored to specific needs and perspectives.

Workload Management

Run:ai simplifies the management of AI workloads, including:

* **Scheduling:** Automate the scheduling and execution of training and inference jobs.

* **Prioritization:** Assign priorities to workloads to ensure that critical tasks are completed first.

* **Resource allocation:** Allocate resources (GPUs, CPUs, memory) to workloads based on their needs and priorities.

Resource Access Policy

Run:ai offers a flexible resource access policy engine that allows organizations to define and enforce rules governing how users can access and utilize cluster resources. This enables:

* **Fair-share allocation:** Ensure that resources are allocated fairly among users and teams.

* **Quota management:** Set limits on resource usage to prevent overconsumption and ensure efficient allocation.

* **Priority enforcement:** Prioritize access to resources based on user roles or workload importance.
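One common way to reason about fair-share allocation is max-min fairness: every team gets an equal share, and whatever a team does not need is redistributed to the others. The sketch below illustrates that general idea only; it is an assumption for explanation, not a description of how Run:ai's policy engine is implemented, and the function and team names are hypothetical.

```python
def max_min_fair_share(capacity: float, demands: dict[str, float]) -> dict[str, float]:
    """Split `capacity` GPUs among teams using max-min fairness."""
    allocation = {team: 0.0 for team in demands}
    remaining = capacity
    unsatisfied = {team for team, demand in demands.items() if demand > 0}
    while unsatisfied and remaining > 1e-9:
        # Offer every unsatisfied team an equal slice of what is left.
        share = remaining / len(unsatisfied)
        for team in sorted(unsatisfied):
            grant = min(share, demands[team] - allocation[team])
            allocation[team] += grant
            remaining -= grant
        # Teams whose demand is met drop out; their unused slice is re-shared.
        unsatisfied = {t for t in unsatisfied if demands[t] - allocation[t] > 1e-9}
    return allocation


# Example: 8 GPUs, three teams with different demands.
shares = max_min_fair_share(8, {"vision": 2, "nlp": 4, "speech": 10})
```

In this example the small team receives its full request, and the two larger teams split the remainder equally.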

Workload Policy

Run:ai supports the creation of workload policies, defining rules and guidelines for managing AI workloads. This enables organizations to:

* **Standardize workflows:** Establish consistent workflows and best practices for running AI workloads.

* **Automate tasks:** Automate common workload management operations, such as resource allocation and scheduling.

* **Improve security:** Enforce policies to ensure compliance with security standards and regulations.
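As a rough illustration of what a workload policy does, the sketch below fills in default values for a submitted workload and reports any fields that exceed a limit. The policy layout (`defaults`, `limits`), the field names, and `apply_policy` are hypothetical and are not Run:ai's policy schema.

```python
def apply_policy(workload: dict, policy: dict) -> tuple[dict, list[str]]:
    """Fill in policy defaults, then report any limit violations."""
    # Values given in the workload override the policy defaults.
    resolved = {**policy.get("defaults", {}), **workload}
    violations = [
        f"{field}={resolved[field]} exceeds limit {limit}"
        for field, limit in policy.get("limits", {}).items()
        if resolved.get(field, 0) > limit
    ]
    return resolved, violations


# Hypothetical policy: default to 1 GPU, allow at most 4 GPUs and 72 hours.
policy = {
    "defaults": {"gpus": 1, "max_runtime_hours": 24},
    "limits": {"gpus": 4, "max_runtime_hours": 72},
}
resolved, violations = apply_policy({"name": "train-bert", "gpus": 8}, policy)
```

Here the submission inherits the default runtime limit and is flagged for requesting more GPUs than the policy allows.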

Authorization & Access Control

Run:ai employs robust authorization and access control mechanisms to secure access to resources and data, including:

* **Fine-grained permissions:** Grant specific permissions to users or groups, providing granular control over access to resources.

* **Auditing and logging:** Track user actions and access patterns, providing an audit trail for security and compliance purposes.

* **Integration with existing security tools:** Integrate Run:ai with existing security systems for centralized management and control.

AI Workload Scheduler

Run:ai's AI Workload Scheduler is designed to optimize resource management across the entire AI lifecycle. It provides:

* **Dynamic scheduling:** Dynamically allocate resources to workloads based on current needs and priorities.

* **GPU pooling:** Consolidate GPU resources into pools, allowing for flexible allocation to diverse workloads.

* **Priority scheduling:** Ensure that critical tasks are assigned resources first, optimizing the overall throughput of the AI cluster.
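Priority scheduling can be pictured with a simple priority queue: jobs are considered from highest to lowest priority, and each one starts if enough GPUs are free. This is a generic sketch for illustration, not Run:ai's scheduling algorithm; the job tuples and the `schedule` function are assumptions.

```python
import heapq


def schedule(jobs: list[tuple[str, int, int]], free_gpus: int) -> tuple[list[str], list[str]]:
    """Start jobs in priority order while GPUs remain.

    Each job is (name, priority, gpus). Higher priority goes first;
    ties are broken by submission order.
    """
    heap = [(-priority, order, name, gpus)
            for order, (name, priority, gpus) in enumerate(jobs)]
    heapq.heapify(heap)
    running, pending = [], []
    while heap:
        _, _, name, gpus = heapq.heappop(heap)
        if gpus <= free_gpus:
            free_gpus -= gpus
            running.append(name)
        else:
            # Job does not fit; smaller lower-priority jobs may still backfill.
            pending.append(name)
    return running, pending


jobs = [("notebook", 1, 1), ("train-large", 3, 4), ("inference", 2, 2), ("train-xl", 3, 8)]
running, pending = schedule(jobs, free_gpus=6)
```

Note the design choice in this sketch: a job that does not fit is skipped rather than blocking the queue, so lower-priority jobs can use leftover GPUs. A real scheduler may instead reserve capacity or preempt running work.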

Node Pooling

Run:ai introduces the concept of Node Pooling, allowing organizations to manage heterogeneous AI clusters with ease. This feature provides:

* **Cluster configuration:** Define quotas, priorities, and policies at the Node Pool level to manage resource allocation.

* **Resource management:** Ensure fair and efficient allocation of resources within the cluster, considering factors like GPU type, memory, and CPU cores.

* **Workload distribution:** Allocate workloads to appropriate Node Pools based on their resource requirements.
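To make workload distribution across Node Pools concrete, here is a minimal sketch that picks a pool for a workload based on GPU type, GPU memory, and free GPUs. The `NodePool` fields, the pool names, and the "smallest adequate pool" rule are all assumptions for illustration, not Run:ai's placement logic.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class NodePool:
    name: str
    gpu_type: str
    gpu_memory_gb: int
    free_gpus: int


def pick_pool(pools: list[NodePool], gpu_memory_gb: int, gpus: int,
              gpu_type: Optional[str] = None) -> Optional[NodePool]:
    """Return the smallest-memory pool that satisfies the request, or None."""
    candidates = [
        p for p in pools
        if p.free_gpus >= gpus
        and p.gpu_memory_gb >= gpu_memory_gb
        and (gpu_type is None or p.gpu_type == gpu_type)
    ]
    # Prefer the pool with the least GPU memory that still fits,
    # keeping larger GPUs available for workloads that need them.
    return min(candidates, key=lambda p: p.gpu_memory_gb, default=None)


pools = [
    NodePool("inference-pool", "T4", 16, free_gpus=8),
    NodePool("training-pool", "A100", 80, free_gpus=4),
]
chosen = pick_pool(pools, gpu_memory_gb=12, gpus=1)
```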

Container Orchestration

Run:ai seamlessly integrates with container orchestration platforms such as Kubernetes, enabling the deployment and management of distributed containerized AI workloads. This provides:

* **Automated scaling:** Scale AI workloads up or down seamlessly based on demand.

* **High availability:** Ensure that AI workloads remain available even if individual nodes fail.

* **Simplified deployment:** Deploy and manage AI workloads using containerized images, promoting portability and reproducibility.

GPU Fractioning

Run:ai's GPU Fractioning technology allows you to divide a single GPU into multiple fractions, providing a cost-effective way to run workloads that require only a portion of a GPU’s resources. This feature:

* **Increases cost efficiency:** Allows you to run more workloads on the same infrastructure by sharing GPU resources.

* **Simplifies resource management:** Streamlines the allocation of GPU resources to diverse workloads with varying requirements.

* **Improves utilization:** Maximizes the utilization of GPUs, reducing idle time and increasing efficiency.
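The utilization benefit of sharing GPUs can be seen with a small packing exercise: several workloads that each need only part of a GPU are placed onto as few whole GPUs as possible. The sketch below uses first-fit decreasing, a standard bin-packing heuristic; it is an illustrative assumption, not how Run:ai assigns fractions, and `pack_fractions` is a hypothetical helper.

```python
def pack_fractions(requests: dict[str, float], num_gpus: int) -> dict[str, int]:
    """Place fractional GPU requests onto whole GPUs (first-fit decreasing).

    Returns a mapping of workload name to GPU index. Workloads that do not
    fit anywhere are left out of the result.
    """
    free = [1.0] * num_gpus  # remaining fraction on each GPU
    placement: dict[str, int] = {}
    # Place the largest requests first so small ones fill the gaps.
    for name, fraction in sorted(requests.items(), key=lambda kv: -kv[1]):
        for gpu, capacity in enumerate(free):
            if fraction <= capacity + 1e-9:
                free[gpu] = capacity - fraction
                placement[name] = gpu
                break
    return placement


# Four workloads needing 2.0 GPUs in total fit on two physical GPUs.
placement = pack_fractions({"a": 0.5, "b": 0.5, "c": 0.25, "d": 0.75}, num_gpus=2)
```

Without fractioning, these four workloads would each occupy a whole GPU; with sharing, two GPUs are enough in this example.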

GPU Nodes

Run:ai supports a wide range of GPU nodes from leading vendors, including NVIDIA, AMD, and Intel. This ensures compatibility with a variety of hardware configurations and allows organizations to utilize existing infrastructure or select the most suitable GPUs for their specific needs.

CPU Nodes

In addition to GPU nodes, Run:ai also supports CPU nodes for tasks that do not require GPU acceleration. This allows organizations to leverage existing CPU infrastructure or utilize more cost-effective CPU resources for specific tasks.

Storage

Run:ai integrates with various storage solutions, including NFS, GlusterFS, Ceph, and local disks. This flexibility allows organizations to choose a storage solution that best meets their performance, scalability, and cost requirements.

Network

Run:ai is designed to run on high-bandwidth networks, which allow fast data transfer between nodes and efficient execution of distributed AI workloads. Run:ai can also be deployed in air-gapped environments with no internet connectivity, keeping sensitive data isolated and secure.

Notebooks on Demand

Run:ai's Notebooks on Demand feature enables users to launch preconfigured workspaces with their favorite tools and frameworks, including Jupyter Notebook, PyCharm, and VS Code. This:

* **Simplifies setup:** Quickly launch workspaces without the need to manually install dependencies.

* **Ensures consistency:** Provides consistent environments across teams and projects.

* **Enhances collaboration:** Share and collaborate on workspaces seamlessly with team members.

Training & Fine-tuning

Run:ai simplifies the process of training and fine-tuning AI models:

* **Queue batch jobs:** Schedule and run batch training jobs with a single command line.

* **Distributed training:** Effectively train models on large datasets across multiple GPUs.

* **Model optimization:** Optimize training parameters and hyperparameters for improved performance.

Private LLMs

Run:ai allows users to deploy and manage their own private LLMs, custom-trained models that can be used for specific applications. This enables:

* **Model deployment:** Deploy LLMs for inference and generate personalized responses.

* **Model management:** Store, version, and manage LLM models for easy access and updates.

* **Data privacy:** Keep user data confidential and secure within the organization's infrastructure.

NVIDIA & Run:ai Bundle

Run:ai and NVIDIA have partnered to offer a fully integrated solution for NVIDIA DGX systems, positioned as a high-performance full-stack solution for AI workloads. This bundle:

* **Optimizes DGX performance:** Leverages Run:ai's capabilities to maximize the utilization and performance of DGX hardware.

* **Simplifies management:** Provides a single platform for managing DGX resources and AI workloads.

* **Accelerates AI development:** Empowers organizations to accelerate their AI initiatives with a cohesive solution.

Deploy on Your Own Infrastructure; Cloud. On-Prem. Air-Gapped.

Run:ai supports a wide range of deployment environments, offering flexible options for organizations with different infrastructure requirements. This includes:

* **Cloud deployments:** Deploy Run:ai on major cloud providers, such as AWS, Azure, and Google Cloud, allowing you to leverage their services and resources.

* **On-premises deployments:** Deploy Run:ai on your own hardware infrastructure, providing complete control over your AI environment.

* **Air-gapped deployments:** Deploy Run:ai in isolated environments with no internet connectivity, ensuring the security and integrity of your data.

Any ML Tool & Framework

Run:ai is designed to work with a wide range of machine learning tools and frameworks, including:

* **TensorFlow:** Run and manage TensorFlow workloads effectively.

* **PyTorch:** Deploy and optimize PyTorch models for training and inference.

* **JAX:** Utilize JAX for high-performance AI computations.

* **Keras:** Build and train Keras models seamlessly.

* **Scikit-learn:** Utilize Scikit-learn for machine learning tasks.

* **XGBoost:** Leverage XGBoost for gradient boosting algorithms.

* **LightGBM:** Deploy LightGBM for efficient gradient boosting.

* **CatBoost:** Utilize CatBoost for robust gradient boosting.

Any Kubernetes

Run:ai seamlessly integrates with Kubernetes, the leading container orchestration platform. This ensures compatibility with existing Kubernetes environments and allows organizations to leverage its benefits, including:

* **Automated scaling:** Scale AI workloads dynamically based on demand.

* **High availability:** Ensure that AI workloads remain available even if individual nodes fail.

* **Containerized deployments:** Deploy AI workloads as containers, promoting portability and reproducibility.

Anywhere

Run:ai is designed to be deployed anywhere, providing flexibility to organizations with diverse infrastructure needs. This includes:

* **Data centers:** Deploy Run:ai in your own data centers for maximum control and security.

* **Cloud providers:** Deploy Run:ai on major cloud providers for scalability and flexibility.

* **Edge devices:** Deploy Run:ai on edge devices for real-time AI applications.

Any Infrastructure

Run:ai supports a broad range of infrastructure components, allowing you to build your ideal AI environment:

* **GPUs:** Leverage high-performance GPUs from leading vendors, such as NVIDIA, AMD, and Intel.

* **CPUs:** Utilize CPUs for tasks that do not require GPU acceleration.

* **ASICs:** Integrate ASICs for specialized tasks, such as machine learning inference.

* **Storage:** Choose storage solutions that best meet your performance, scalability, and cost requirements.

* **Networking:** Deploy Run:ai on high-bandwidth networks for efficient data transmission and distributed workloads.

FAQ

* **Cloud:** Run:ai can be deployed on any major cloud provider, including AWS, Azure, and GCP.

* **On-Prem:** Run:ai can be deployed on-premises, which allows organizations to keep their data and workloads secure within their own data centers.

* **Air-Gapped:** Run:ai can be deployed in air-gapped environments, which are not connected to the internet. This allows organizations to run AI workloads where strict security requirements apply.

* **Training and deploying machine learning models:** Run:ai can be used to train and deploy machine learning models more efficiently.

* **Inference:** Run:ai can be used to run inference workloads on GPUs, which can help improve the performance of AI applications.

* **Research and development:** Run:ai supports research and development efforts by providing a platform for managing and deploying AI workloads.

* **Deep learning:** Run:ai can be used to train and deploy deep learning models, which are increasingly used across a wide range of applications.

For more information, please contact our team at [email protected]. We are happy to answer your questions and help you get started with Run:ai.

Website Traffic

No Data

Alternative Products

绘AI

Image Generation

Ai Drawing

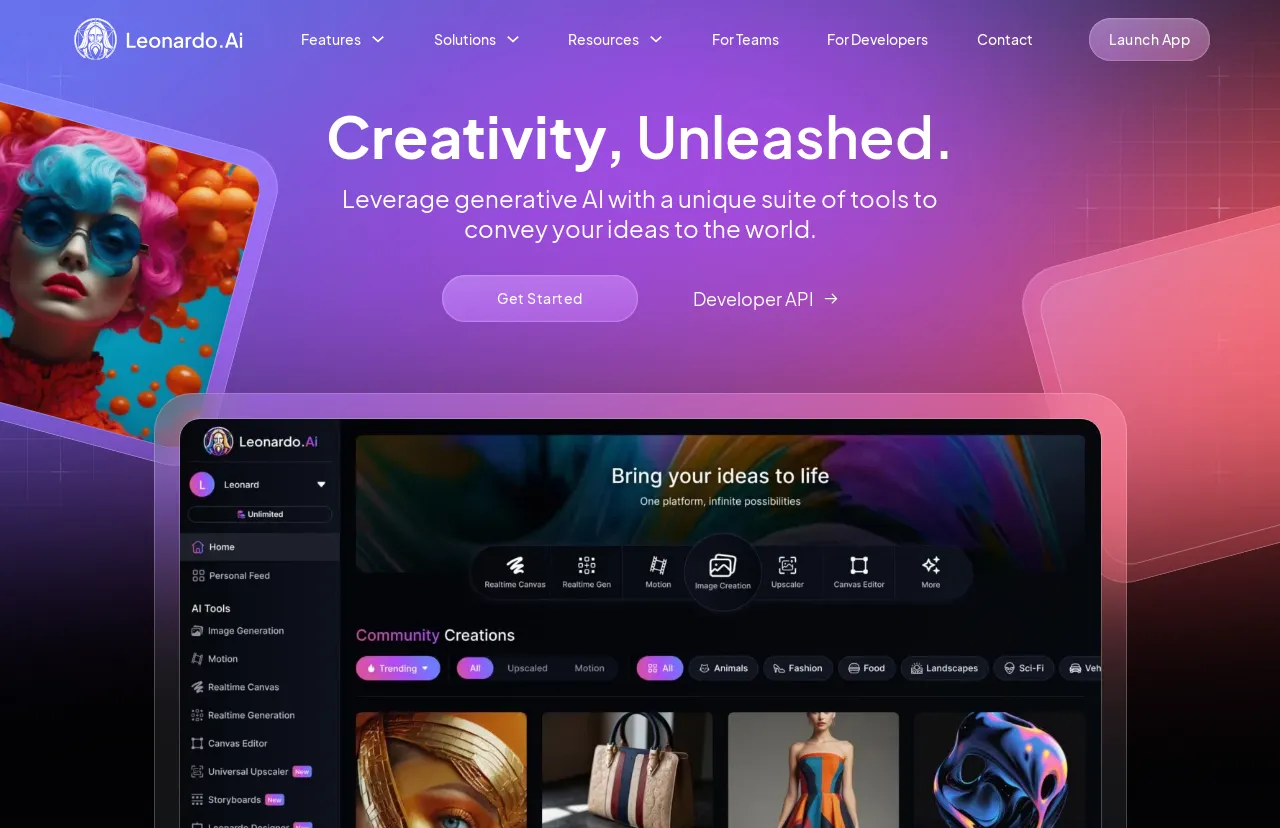

Leonardo AI

Image Generation

Creativity, Unleashed. Empowering your creative vision with generative AI.

AI Art

Image Generation

AI Graphic Creation Platform

360 AI

Image Generation

AI Creates Stunning Artwork

Stockimg AI

Image Generation

Stop wasting time on content production. Try it for free right now and manage your social media with AI!

6pen Art

Image Generation

Turn your imagination into art